We want to hear from you! Help us celebrate one year of The Leading Indicator by sharing your thoughts on the newsletter in this quick survey.

Three years after ChatGPT’s debut, the public discourse about artificial intelligence (AI) remains focused on cheating. Vox recently ran an advice column responding to a college teaching assistant who was overwhelmed by student pieces dripping with obvious hallmarks of chatbot use. The column adds to the long list of op-eds, news articles, podcast episodes, and Substack newsletters lamenting AI’s role in student cheating. Yet the field is missing the larger point highlighted in our last issue: When the assignment is shallow enough for a bot to fake, the problem isn’t just the tool — it’s also the task.

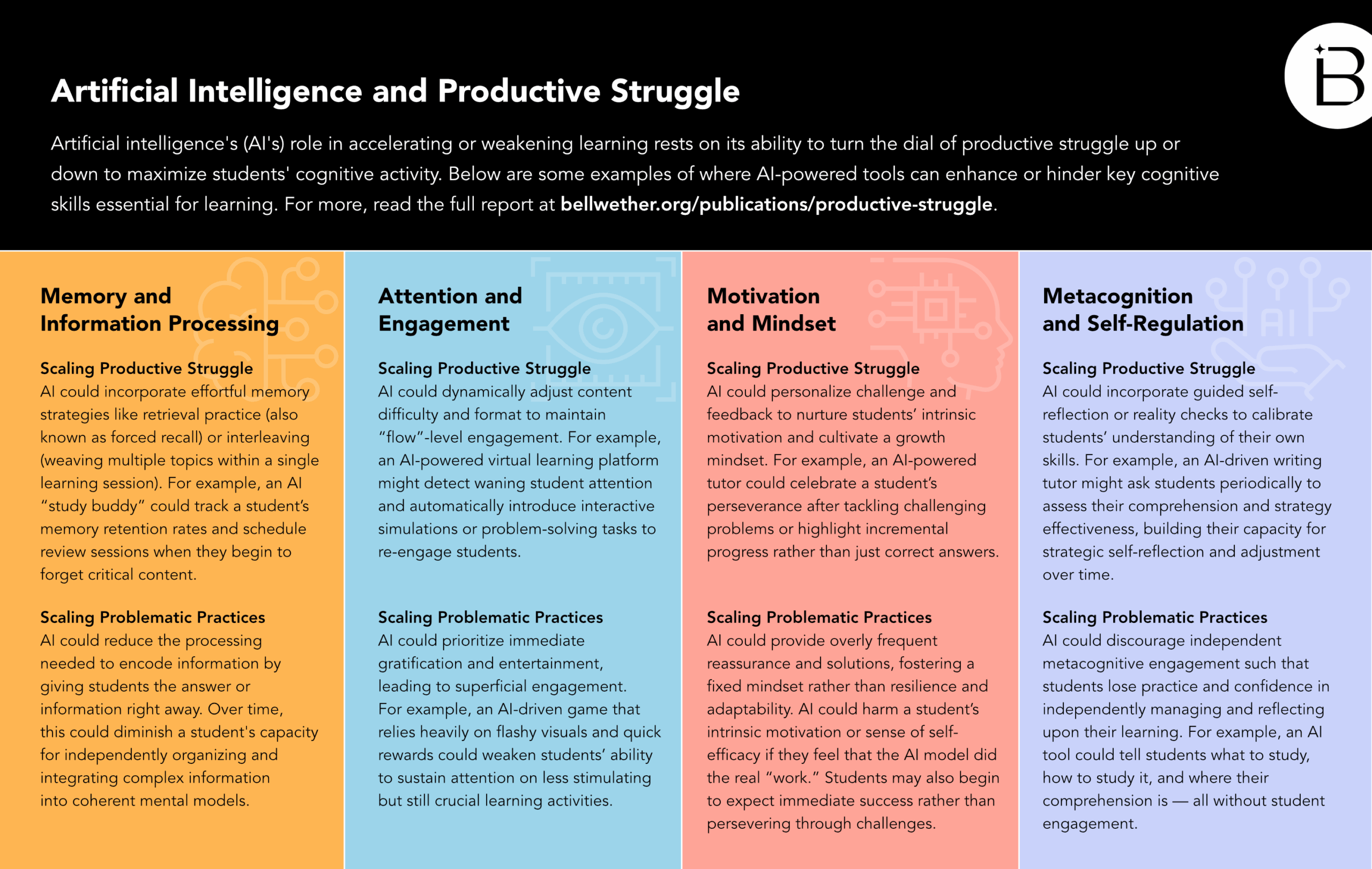

Enter Bellwether’s “Productive Struggle”: Cognitive science teaches us that students learn at the edge of what they know, not when they cruise through worksheet-level recall or, worse, let an AI model do the recall for them.

Students need to struggle to learn. As a result, the stakes are higher than academic dishonesty. Students who become overreliant on AI tools that lack appropriate learning design are risking their cognitive abilities. When students depend on AI to write their essays, they miss out on developing essential skills such as brainstorming, research, critical thinking, analysis, and effective communication (recall this early MIT study going around; despite methodological shortcomings, it’s still driving this conversation). These are not one-and-done skills; they develop iteratively, through repeated attempts, across multiple contexts and subjects, with feedback and reflection over time.

So, is AI only good for letting students’ brains rot? No. AI tools, when intentionally and thoughtfully designed to maximize cognitive processes, could amplify productive struggle and accelerate learning for both students and teachers. Early research shows promise when AI acts as a tutor that refuses to give direct answers and instead prompts students to recall information and problem-solve; another study examining students’ use of Pearson’s AI tool found that when mapped to Bloom’s Taxonomy, about one-third of the queries reflected higher levels of cognitive complexity. These results highlight the potential for thoughtful design and intentional scaffolding from educators, leaders, and ed tech designers to use the power of AI to support deeper cognitive engagement among students (for any ed tech developers, this piece has some great suggestions).

Productive Struggle sketches some possibilities for AI to calibrate difficulty, surface metacognition, and incorporate more cognitive science. The takeaway for leaders: don’t pour energy into an arms race you can’t win. Instead, redesign assignments and tools so that AI helps students think more — not less.

Education Evolution: AI and Learning

In the middle of the Venn diagram created by K-12 fears of cheating (see above) and adult fears of a dwindling workforce (more below) sits higher education. Arguably, colleges and universities face the worst of both worlds: fears of AI making college degrees obsolete converge with fears of languishing critical thinking skills or giving students all the wrong information. In response, both AI companies and higher education are leaning into AI use, arguing that students must be ready to use and engage with AI to be truly prepared for post-postsecondary life. (We covered some of these ventures and the New York Times has a great overview, too.) At the leading edge of this trend are initiatives like The Ohio State University’s (OSU’s) new AI Fluency program, which will “embed AI education into…every undergraduate curriculum.”

Programs like OSU’s are, ultimately, a gamble: By the time its 2025 freshmen graduate, the workforce may have completely evolved. Even in the best case, it may take years to see whether higher education’s bet on AI literacy (or fluency) will pay off in terms of workforce and life outcomes. But a “wait-and-see” approach is not an option, and higher education has greater latitude for innovation than K-12. The field should keep a close eye on AI’s traction in higher education to see what lessons may be gleaned for younger students.

Want to learn more about AI in education? Check out Bellwether’s updated “Learning Systems” analyses, which incorporate news from the past 8+ months for a comprehensive picture of the AI-in-education landscape.

In other news:

- Common Sense Media released a comprehensive toolkit to guide administrators in assessing their school district’s AI readiness. For a quick overview, check out this Education Week interview of Common Sense’s senior director of AI programs.

- Also: With the 2024-25 school year officially wrapped up, a recent survey reveals that generative AI is district CTOs’ top-ranked priority, and will continue to be as students and teachers head back to school in August and September.

- And: The Learning Accelerator released a series of resources for state and local education leaders on AI-related topics.

- A new study investigates the ways in which students “trust” (or don’t) AI and theorizes that unlike past ed tech tools, students’ relationship with AI chatbots relies on a unique “human-AI trust” model.

- Y Combinator’s summer request for startups includes a call for founders to build AI tutors and other businesses that could alter the future of education.

- In their “Class Disrupted” podcast, hosts Michael Horn and Diane Tavenner wrapped up their miniseries on AI in education with a reflective conversation on lessons learned, beliefs confirmed, and food for future thought.

- A glitch in technology platform BoardDocs, which stores and disseminates school board officials’ documents, allowed public access to sensitive information from school board meetings, highlighting the cybersecurity challenges inherent to local education agencies’ use of third-party platforms.

The Latest: AI Sector Updates

In our last issue, we covered announcements from IBM and Microsoft regarding automation and layoffs. Generally, the public response to “AI is changing the job market” has skewed one of two ways: Optimists promote reskilling or embedding AI skills in job descriptions (a very optimistic education expert might even see the potential for a more talented teaching corps). Pessimists have been skeptical or resistant (see user backlash to Duolingo’s “AI-first” announcement).

But the call is coming from inside the house: Anthropic’s CEO Dario Amodei warned that AI could “wipe out half of all entry-level white-collar jobs,” and that companies and the government “need to stop sugarcoating what’s coming.” This came on the heels of Anthropic’s first “Developer Day,” where executives and developers emphasized AI agents automating job functions. A recent Semafor article shows that the trend has already started, driven by the uptick in vibe coding, and the New York Times profiled a company whose goal is to “fully automate work…as fast as possible.” These developments foreshadow a wholesale takeover of white-collar jobs, which Amodei believes requires broader, sweeping solutions such as increasing public awareness and debating policy solutions for “an economy dominated by superhuman intelligence.”

In other news:

- Mary Meeker and the team at BOND released a trend report looking at the AI sector. The theme of all 340 pages? Speed. To get an overview, you could drop it into ChatGPT — or you could read this interview with Meeker.

- As AI agents are poised to take over the internet, another Sam Altman startup aims to give everyone a “digital ID,” which proponents claim will be crucial to verifying who is human and who is not.

- Anthropic, which bills itself as the most safety-oriented AI company, is getting sued by Reddit for scraping its data long after it had agreed to stop — ironic, given that Anthropic is also one of the only tech companies that did not advocate for the Trump administration to allow models to use copyrighted information for training.

- The latest models and capabilities:

  - DeepSeek released a new version of its R1 (the one that broke the internet in January), amid suspicions that it probably used Gemini as a training tool.

  - Perplexity Labs’ new tool offers new capabilities like generating spreadsheets, dashboards, and more.

  - France’s Mistral.ai released its first reasoning model, notable for its multilingual reasoning, as opposed to most American frontier models, which reason in English and then translate outputs into other languages.

  - OpenAI released o3-pro, a “souped-up” version of its existing o3 that surpasses Google’s Gemini 2.5 and Anthropic’s recently released Claude Opus 4.

- Finetuning, safety guardrails, and retrieval-augmented generation (RAG) are oft-touted methods of reducing hallucinations and mistakes, and all involve giving the model more training and more information. But a new method might do the exact opposite, by detraining a model and removing problematic data.

- Researchers are also developing an AI model that can fact-check … other AI models. #Inception! They are naming the method “Search-Augmented Factuality Evaluator (SAFE)” and hope that it will reduce hallucinations.

Pioneering Policy: AI Governance, Regulation, Guidance, and More

CEOs from top AI and energy companies convened in Washington, D.C. recently to discuss AI’s immediate and long-term energy needs. These conversations included potential data center investments, such as Amazon’s announcement of a historic $20 billion plan to build two data center complexes in Pennsylvania, the state’s largest-ever economic project. Policymakers are positioning data center growth as a means to offset job losses caused by AI technology; for example, Gov. Shapiro’s office boasted that Amazon’s investment will create “thousands of good-paying, stable jobs” for Pennsylvanians. In July, Sen. Dave McCormick will host the “Pennsylvania Energy and Innovation Summit,” bringing together leaders from major AI and energy companies, as well as President Trump. McCormick’s office says the summit will “attract new data center investment and energy infrastructure” to “jumpstart” Pennsylvania’s economy and create new jobs. As AI reduces the number of traditional, high-paying software engineering jobs (see above), enterprising state leaders are betting on the data center boom to fill some gaps or even increase employment.

Yet the Wall Street Journal found that after construction, data centers don’t employ very many people. Moreover, proponents don’t always address the potential community harms that come from building bigger and bigger data centers. While much of the policy focus has been on regulating AI technology, this sort of “soft” policymaking will have just as much (if not more) of an everyday impact on constituents, whether it’s through new jobs, changing education requirements, or environmental/health crises. It may be tempting for policymakers to highlight the economic benefits of energy infrastructure, but it’s just as crucial for them to consider the full range of externalities of these policies.

In other news:

- More than 260 state lawmakers, a bipartisan group representing all 50 states, sent an open letter to the U.S. House of Representatives opposing the reconciliation bill’s proposed ban on state AI regulation (more here).

- After the outcry, the Senate Committee on Commerce, Science, and Transportation drafted an alternative to the House’s provision: rather than banning state AI regulation outright, it would require states to halt AI regulation or risk losing crucial broadband funding. The new version passed the Senate Parliamentarian’s scrutiny, ensuring that should the bill come to a floor vote, it can pass with a simple majority. Notably, however, the Senate and House must then reconcile the two different versions through additional negotiations.

- As Congress debates the 10-year moratorium on state AI laws and the White House continues to develop its AI Action Plan (rumored to be released this month), this Library of Congress report helpfully outlines other regulatory approaches and options that the federal government might consider.

- Despite the potential federal ban, California lawmakers are moving ahead anyway: the state’s Senate Bill 243 aims to require AI companies and AI-powered social media platforms to implement safety measures against manipulative engagement tactics, state clearly that the chatbot is not human, provide suicide prevention protocols, undergo third-party audits, and allow users to pursue legal action for harms caused by noncompliance.

- Amid this chaos, Multistate.ai notes that state regulations are actually stalling out, with very few AI-related bills making it to governors despite the 1,000-plus introduced in 2025. As state legislative sessions wind down, the scarcity of enacted state laws raises the question: Is the proposed federal moratorium on state action actually solving a pressing AI issue at hand?