What characteristics of teacher candidates predict whether they’ll do well in the classroom? Do elementary school students benefit from accelerated math coursework? What does educational research tell us about the effects of homework?

These are questions I’ve heard over the past few years from educators interested in using research to inform practice, such as attendees of researchED conferences. They suggest that educators want evidence-based policy and practice. And yet, while the past twenty years have seen an explosion in federally funded education research and research products, data indicate that many educators are not aware of federal resources intended to support evidence use in education, such as the Regional Educational Laboratories or the What Works Clearinghouse.

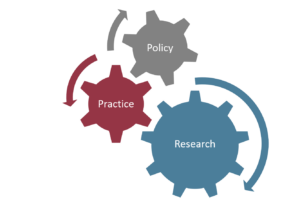

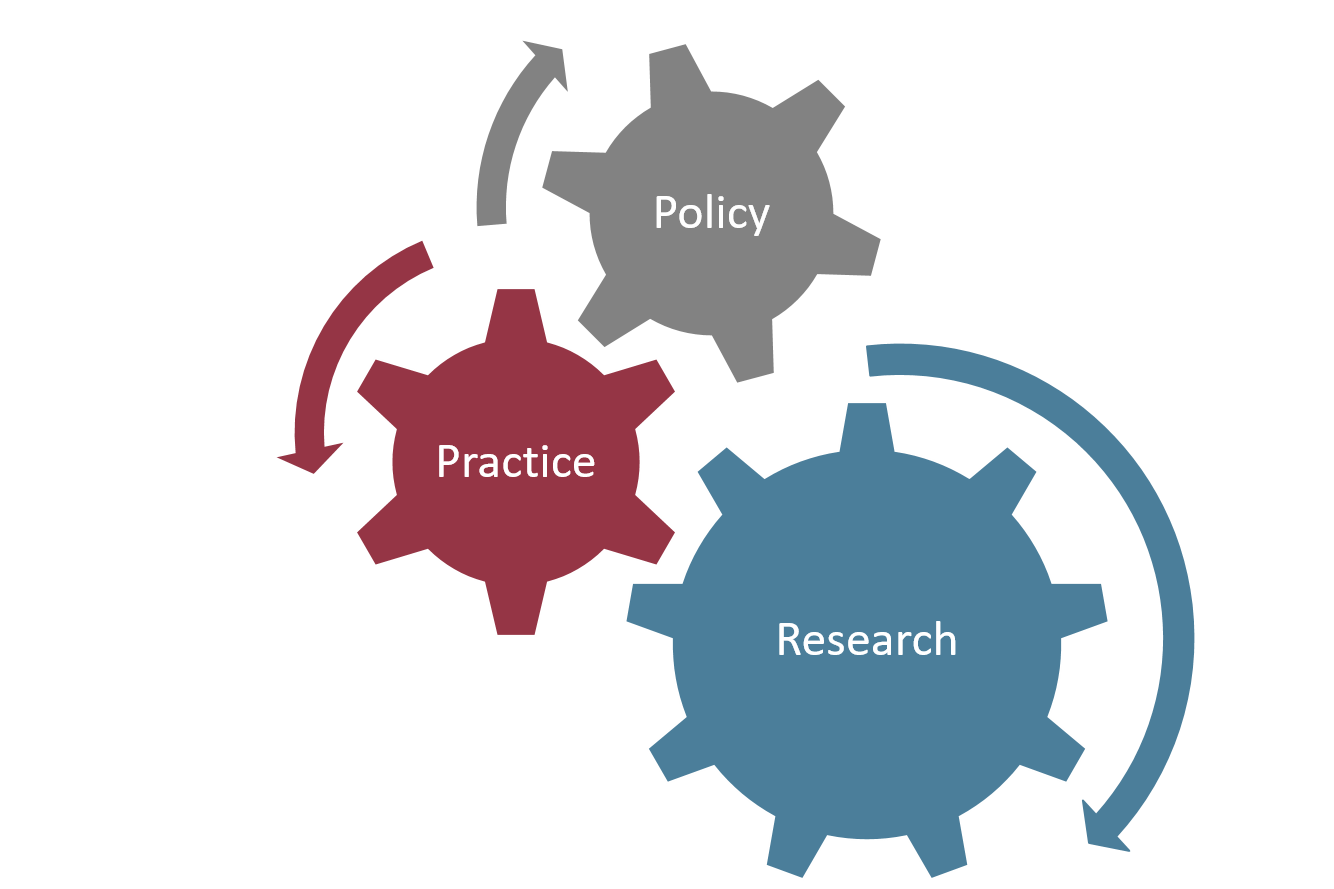

Despite considerable federal investment in both education research and structures that support educators’ use of evidence, educators may be unaware of evidence that could improve policy and practice. What might be behind this disconnect, and what can be done about it? The recently released Institute of Education Sciences (IES) priorities aim to increase research dissemination and use, but they concentrate mainly on producing and disseminating research: the supply side.

While supplying and disseminating research is critical, we also need to think about ways to tap into demand for research as a means of encouraging greater research use. Students, parents, teachers, administrators, board members, and community partners all have a stake in understanding and solving problems in education. As noted here, engaging with these stakeholders to identify problems to study, develop research questions, and interpret findings will yield more useful evidence for designing and implementing policy. IES should consider creating incentives for researchers to engage with a variety of stakeholders, learn what questions these constituents have, and develop research questions that respond to their concerns. If researchers focus on providing evidence that addresses stakeholders’ questions, that evidence will be more relevant to potential users of research.

In addition to increasing research relevance, IES should consider how to increase education stakeholders’ demand for strong research designs. Investing in education stakeholders’ understanding of research design and appreciation for methodological rigor can increase demand for the production and dissemination of research that can address causal questions. Education stakeholders are often interested in whether taking a certain action will lead to better outcomes for students. However, relatively few research studies use methods that support causal inference; much of the quantitative research conducted in education is descriptive and correlational in nature. While descriptive quantitative studies play a critical role in education research, educators need to understand the limitations of correlational research to develop appropriate next steps based on evidence.

As an example of the limitations of correlational research, in my previous role as an evaluator in a school district, I worked on a study that found that students enrolled in accelerated math had greater achievement gains than their peers who were not enrolled. If the accelerated curriculum caused those gains, then expanding access to accelerated math would likely improve student outcomes. But because our research design did not allow for causal inference, we couldn’t be sure that it would.

Why can’t we assume that expanding accelerated math will work, given how well the enrolled students did? Because it’s also possible that more effective teachers are more likely to teach the accelerated sections. Peer effects, the positive influence high-achieving students have on one another, might also explain the gains we observed. If either of those alternative explanations is true, then expanding access to accelerated math might not produce the achievement gains we observed. Those gains might instead reflect what researchers call selection bias: students and teachers are not randomly sorted into accelerated math classes, so the groups being compared differ in ways that also affect outcomes.
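For readers who want to see selection bias in action, here is a small hypothetical simulation. It is not based on the district study: the function `simulate`, the `advantage` variable, and every number in it are invented for illustration. In this made-up world the accelerated course has no effect at all (`true_effect=0.0`), but students with a larger unobserved `advantage` (standing in for things like stronger prior achievement, more effective teachers, or high-achieving peers) are more likely to enroll. A naive comparison of enrolled and non-enrolled students still shows a large gap, while assigning students by coin flip makes the gap disappear.

```python
import random
import statistics


def simulate(n_students=10_000, true_effect=0.0, seed=1):
    """Hypothetical simulation of selection bias.

    Returns (naive_gap, randomized_gap): the difference in mean achievement
    gains between accelerated and regular students when students self-select
    into the course versus when they are randomly assigned.
    """
    rng = random.Random(seed)
    selected_acc, selected_reg = [], []
    random_acc, random_reg = [], []

    for _ in range(n_students):
        # Unobserved factor that raises gains regardless of the course
        # (illustrative stand-in for prior achievement, teacher quality, peers).
        advantage = rng.gauss(0, 1)
        noise = rng.gauss(0, 1)

        # Scenario 1: selection. Students with more advantage are more
        # likely to end up in accelerated math.
        enrolled = advantage + rng.gauss(0, 1) > 0.5
        gain = 2.0 * advantage + true_effect * enrolled + noise
        (selected_acc if enrolled else selected_reg).append(gain)

        # Scenario 2: random assignment with the same outcome model.
        assigned = rng.random() < 0.5
        gain = 2.0 * advantage + true_effect * assigned + noise
        (random_acc if assigned else random_reg).append(gain)

    naive_gap = statistics.mean(selected_acc) - statistics.mean(selected_reg)
    randomized_gap = statistics.mean(random_acc) - statistics.mean(random_reg)
    return naive_gap, randomized_gap


if __name__ == "__main__":
    naive, randomized = simulate()
    print(f"Naive comparison (self-selection): {naive:.2f}")
    print(f"Randomized comparison:             {randomized:.2f}")
```

Even though the course does nothing in this sketch, the naive comparison reports a gap well above zero, while the randomized comparison lands near zero. Rerunning with `simulate(true_effect=0.5)` shows the randomized comparison recovering an effect close to 0.5. This is why research designs that support causal inference matter for the question a principal actually cares about.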

Without a clear understanding of whether a study is designed to establish causal relationships, a school principal could easily misinterpret its findings. Failing to appreciate the difference between correlation and causation is a serious impediment to developing policies that are likely to have a positive impact.

In my next post, I’ll describe some ways researchers and practitioners have collaborated to answer questions that address real needs in the field and to produce findings that can inform policy and practice.

April 22, 2019

Why Is There a Disconnect Between Research and Practice and What Can Be Done About It?

By Bellwether
